Journal of Image and Signal Processing

Vol. 07 No. 02 (2018), Article ID: 24317, 9 pages

10.12677/JISP.2018.72008

Invariant Feature and Kernel Distance Metric Learning Based Person Re-Identification

Qi Liu1,2, Li Hou2, Zhangyou Peng1

1School of Communication & Information Engineering, Shanghai University, Shanghai

2School of Information Engineering, Huangshan University, Huangshan Anhui

Received: Mar. 15th, 2018; accepted: Mar. 28th, 2018; published: Apr. 4th, 2018

ABSTRACT

Pedestrians may vary greatly in appearance across cameras due to differences in illumination, viewpoint, and pose, which poses serious challenges for person re-identification. This paper proposes a kernel distance metric learning algorithm based on invariant features for person re-identification. First, an invariant feature called LOMO-FFN, formed by concatenating the local maximal occurrence (LOMO) and feature fusion net (FFN) descriptors, is used to encode human appearance across cameras. Second, kernel canonical correlation analysis (KCCA) with a Gaussian kernel is applied as the distance metric learning algorithm to obtain an optimized feature distance between pedestrians across cameras, based on the extracted feature representation. Experimental results show that the proposed algorithm effectively improves recognition rates on two challenging datasets (VIPeR and PRID450S).

Keywords: Person Re-Identification, Invariant Feature, Kernel Distance Metric Learning

Copyright © 2018 by authors and Hans Publishers Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

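1. Introduction

In recent years, the demand for intelligent video surveillance has grown steadily in public safety, commerce, intelligent transportation, national defense, and military applications. Networks of intelligent surveillance cameras are installed in airports, subways, stations, banks, schools, and military facilities to provide automatic, unattended monitoring and thereby protect national facilities and the public. Automatic pedestrian tracking across a camera network is one of the most important and most challenging core technologies of intelligent video surveillance, and it has become an important research topic in pattern recognition, image processing, and computer vision. Person re-identification is the key technology behind such tracking and directly affects its accuracy and robustness.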

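Person re-identification asks a computer to decide whether pedestrians seen by different cameras share the same identity, matching them across cameras by their appearance. Differences in illumination, viewpoint, and pose across cameras cause large changes in appearance, as shown in Figure 1 (the top and bottom rows show images taken by different cameras in the VIPeR benchmark dataset, and each column shows the same person), which makes the problem severely challenging. Current research on person re-identification focuses on two aspects: feature extraction and metric learning.

Figure 1. Pedestrian examples from the VIPeR dataset

Many feature types have been applied to person re-identification, including color [1] [2], texture [3] [4] [5] [6], shape [7] [8], global features [1] [8], regional features [6] [9], patch-based features [3] [5] [10], and semantic features [11] [12]. Fusing multiple features to improve accuracy and robustness [13] [14] [15] [16] [17] is the main research direction in feature extraction. For example, Gray and Tao [13] proposed the ensemble of localized features (ELF) to handle viewpoint changes across cameras; it fuses color features from three color spaces (RGB, YCbCr, and HSV) with texture features extracted by Schmid and Gabor filters at various radii and scales. Yang et al. [14] combined the salient color names based color descriptor (SCNCD), computed in four color spaces (original RGB, normalized rgb, l1l2l3, and HSV), with color histograms to describe the color appearance of pedestrians. Liao et al. [15] combined HSV color histograms with the scale invariant local ternary pattern (SILTP) texture descriptor. Wu et al. [16] fused convolutional neural network (CNN) deep features with hand-crafted features (RGB, HSV, YCbCr, Lab, and YIQ color features, plus multi-scale, multi-orientation texture features extracted by Gabor filters) into a more discriminative feature fusion deep neural network.

To mitigate the large appearance changes that arise when matching pedestrians across cameras and to improve re-identification accuracy, distance metric learning methods [18] - [23] have been widely applied in recent years. Distance metric learning focuses on learning an optimized similarity/distance model: it learns a linear transformation that maps data from the original feature space into a new feature space in which features of the same target across cameras lie closer together and features of different targets lie farther apart. Mahalanobis metric learning is widely used to find such a global projection of the feature space. For example, Koestinger et al. [21] proposed the KISS metric (KISSME), based on equivalence constraints derived from labels. Pedagadi et al. [22] proposed a low-dimensional manifold metric learning framework that combines unsupervised principal component analysis (PCA) with supervised local Fisher discriminant analysis (LFDA) for dimensionality reduction. Xiong et al. [23] further proposed kernel LFDA (KLFDA). Liao et al. [15] proposed a discriminative metric learning method, cross-view quadratic discriminant analysis (XQDA).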
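As a brief illustration of the linear-transformation view of metric learning described above, a Mahalanobis-type distance is commonly written as follows. This is the standard textbook formulation, not notation taken from the cited papers; the symbols $x_i$, $x_j$, $M$, and $L$ are introduced here only for illustration.

```latex
% Mahalanobis-type distance with a learned positive semidefinite matrix M
d_M(x_i, x_j) = (x_i - x_j)^{\top} M \, (x_i - x_j), \qquad M \succeq 0 .

% Factoring M = L^{\top} L shows the equivalence with a learned linear map L:
d_M(x_i, x_j) = \lVert L x_i - L x_j \rVert_2^{2} .
```

Under this reading, learning $M$ amounts to learning the projection $L$ so that matched cross-camera pairs end up close and mismatched pairs end up far apart in the projected space.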

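Therefore, two key problems remain to be solved in person re-identification: finding a discriminative pedestrian feature representation that is invariant and robust to viewpoint, illumination, and pose, and finding machine learning methods that optimize the feature distance between pedestrians across cameras.

To address these problems, this paper proposes the person re-identification algorithm shown in Figure 2. It consists of two parts: LOMO-FFN invariant feature extraction and KCCA kernel metric learning. First, two invariant features (LOMO and FFN) are extracted from pedestrian images under different cameras, and the two feature vectors are concatenated into a composite invariant feature (LOMO-FFN) that represents pedestrian appearance across cameras. Then a KCCA kernel metric learning model is trained on the LOMO-FFN features to obtain an optimized distance for each cross-camera pedestrian pair, which serves as the final cross-view distance.

The main contributions of this paper are as follows:

1) An invariant feature descriptor, LOMO-FFN, that is robust to illumination, viewpoint, and pose is proposed to represent pedestrian appearance effectively;

2) The Gaussian kernel distance metric learning algorithm KCCA is adopted to optimize the feature distance between pedestrians across cameras and to mitigate the large changes in cross-camera appearance.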

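2. LOMO-FFN Invariant Feature Extraction

To exploit both the invariance of LOMO to illumination and viewpoint and the invariance of FFN to pedestrian pose, we concatenate the LOMO and FFN descriptors into one composite invariant feature, denoted LOMO-FFN. Its dimension is the sum of the dimensions of the LOMO and FFN feature vectors.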
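The concatenation step can be sketched as follows. This is a minimal illustration, not the authors' code: the function names are hypothetical, the per-descriptor L2 normalization is an assumption added here so that neither descriptor dominates (the paper does not state how the two vectors are scaled), and the 26,960-dimensional LOMO length is assumed from the LOMO description in [15]. Only the 4096-dimensional FFN length is stated in this paper.

```python
import numpy as np

def l2_normalize(v, eps=1e-12):
    """Scale a vector to unit Euclidean length (assumption, not from the paper)."""
    return v / (np.linalg.norm(v) + eps)

def lomo_ffn(lomo_vec, ffn_vec):
    """Concatenate the two descriptors into one LOMO-FFN vector."""
    return np.concatenate([l2_normalize(lomo_vec), l2_normalize(ffn_vec)])

# Random stand-ins for real descriptors; dimensions are illustrative assumptions.
rng = np.random.default_rng(0)
lomo_vec = rng.random(26960)   # assumed LOMO length
ffn_vec = rng.random(4096)     # FFN length stated in Section 2.2
feature = lomo_ffn(lomo_vec, ffn_vec)
print(feature.shape)
```

The output length is simply the sum of the two input lengths, which matches the dimension rule stated above.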

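2.1. LOMO Feature Descriptor

LOMO combines HSV color features with scale invariant local ternary pattern (SILTP) texture features to describe pedestrian appearance [15]. To better handle illumination changes between camera views, the original pedestrian image is first converted by the multiscale Retinex algorithm [24], and HSV color features are then extracted from the converted image. SILTP is an illumination-invariant texture descriptor: it is invariant to changes in light intensity and robust to image noise [25]. Figure 3 illustrates LOMO feature extraction.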

Figure 2. Flowchart of the proposed algorithm

Figure 3. Illustration of LOMO invariant feature extraction [15]

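A 10 × 10 sliding subwindow, moved with a step of 5 pixels so that neighboring subwindows overlap, describes the local regions of a pedestrian image. In each subwindow, an 8 × 8 × 8-bin joint HSV color histogram and SILTP texture histograms at two scales are extracted. All subwindows at the same horizontal position are then examined, and the local occurrence of each pattern (that is, each histogram bin) is maximized over them. In addition, a three-scale pyramid representation is used: the original pedestrian image is downsampled by 2 × 2 local pooling, and the feature extraction steps above are repeated at each scale. Finally, the local maximal feature vectors computed at every horizontal position are concatenated to form the final LOMO descriptor of the image.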
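The local maximal occurrence step can be sketched for the color part as follows. This is a simplified illustration under stated assumptions, not the reference implementation of [15]: it reads "same horizontal position" as one row of subwindows, it expects an image that is already in HSV with values in [0, 1), it omits the Retinex preprocessing and the SILTP histograms, and it applies the 2 × 2 average pooling directly to the HSV values. All function names are hypothetical.

```python
import numpy as np

def lomo_color_one_scale(hsv, win=10, step=5, bins=8):
    """Max-pooled joint HSV histograms, one 512-bin vector per row of windows."""
    h, w, _ = hsv.shape
    # Quantize each channel into `bins` levels and build a joint bin index.
    q = np.minimum((hsv * bins).astype(int), bins - 1)
    joint = (q[..., 0] * bins + q[..., 1]) * bins + q[..., 2]
    rows = []
    for y in range(0, h - win + 1, step):
        hists = []
        for x in range(0, w - win + 1, step):
            patch = joint[y:y + win, x:x + win].ravel()
            hists.append(np.bincount(patch, minlength=bins ** 3))
        # Local maximal occurrence: per-bin maximum over the windows in this row.
        rows.append(np.max(hists, axis=0))
    return np.concatenate(rows)

def pool2x2(img):
    """Downsample by averaging non-overlapping 2 x 2 blocks."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    return img.reshape(h // 2, 2, w // 2, 2, -1).mean(axis=(1, 3))

def lomo_color_sketch(hsv, scales=3):
    """Repeat the extraction on a three-scale pyramid and concatenate."""
    feats = []
    for _ in range(scales):
        feats.append(lomo_color_one_scale(hsv))
        hsv = pool2x2(hsv)
    return np.concatenate(feats)

# A random 128 x 48 image stands in for a real pedestrian image.
rng = np.random.default_rng(0)
feat = lomo_color_sketch(rng.random((128, 48, 3)))
print(feat.shape)
```

For a 128 × 48 input, the three scales contribute 24, 11, and 5 rows of windows, so this color-only sketch yields 40 × 512 values. Taking the maximum along each row is what discards the horizontal position of a pattern, which is the source of the viewpoint tolerance described above.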

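2.2. FFN Feature Descriptor

FFN combines deep features with hand-crafted color and texture features to form a feature fusion deep neural network [16]. Figure 4 illustrates FFN feature extraction.

FFN feature extraction consists of two sub-networks. The first sub-network applies convolution, pooling, and neuron activation to the input pedestrian image to extract convolutional neural network (CNN) features, which are taken from the fifth convolution and pooling layer. The second sub-network extracts RGB, HSV, LAB, YCbCr, and YIQ color features and 16 kinds of Gabor texture features from the same image. Each input image is divided into 18 horizontal stripes; 28 feature channels are built for each stripe, and each feature channel is represented by a 16-dimensional histogram vector. The hand-crafted color and texture features from the second sub-network steer the learning of the CNN features so that the CNN produces features complementary to them. Finally, the two sub-networks are joined at the fusion layer to form the 4096-dimensional FFN descriptor.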
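The layout of the hand-crafted input to the second sub-network (18 stripes, 28 channels, 16 bins per channel) can be sketched as follows. This is a minimal sketch that assumes the 28 channel maps have already been computed and scaled to [0, 1]; how the color spaces and Gabor responses are combined into those 28 channels is not reproduced here, and the function name and the per-histogram normalization are assumptions.

```python
import numpy as np

def stripe_histograms(channels, n_stripes=18, n_bins=16):
    """Per-stripe, per-channel histograms of an H x W x C stack of channel maps."""
    height, _, n_channels = channels.shape
    # Split the image height into n_stripes horizontal bands.
    edges = np.linspace(0, height, n_stripes + 1).astype(int)
    feats = []
    for top, bottom in zip(edges[:-1], edges[1:]):
        stripe = channels[top:bottom]
        for c in range(n_channels):
            hist, _ = np.histogram(stripe[..., c], bins=n_bins, range=(0.0, 1.0))
            # Normalize each histogram to sum to one (an assumption).
            feats.append(hist / max(hist.sum(), 1))
    return np.concatenate(feats)

# Random channel maps stand in for real color and Gabor responses.
rng = np.random.default_rng(0)
handcrafted = stripe_histograms(rng.random((128, 48, 28)))
print(handcrafted.shape)
```

With the numbers given in the text, the hand-crafted vector has 18 × 28 × 16 = 8064 entries before it enters the fusion network.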

Figure 4. Illustration of FFN feature extraction [16]

3. KCCA核距离度量学习

KCCA通过最大化特征变量之间的相关性来构建特征子空间。通过核技巧将特征向量映射到高维子

空间: 。本文使用高斯径向基核函数如公式(1)所示:

(1)

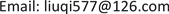

式中 表示特征描述子,s为高斯径向基核中尺度参数,在应用核技巧后,定义摄像机A,B中特征向量的核矩阵分别为

表示特征描述子,s为高斯径向基核中尺度参数,在应用核技巧后,定义摄像机A,B中特征向量的核矩阵分别为 、

、 ,如公式(2)所示:

,如公式(2)所示:

(2)

(2)

式中 、

、 分别表示摄像机A,B训练集样本对的核矩阵,

分别表示摄像机A,B训练集样本对的核矩阵, 表示摄像机A原型集(gallery set)和训练集(training set)样本对的核矩阵,

表示摄像机A原型集(gallery set)和训练集(training set)样本对的核矩阵, 表示摄像机B测试集(probe sets)和训练集(training set)样本对的核矩阵。

表示摄像机B测试集(probe sets)和训练集(training set)样本对的核矩阵。

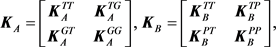

KCCA的目标是通过最大化如公式(3)的目标函数以获取投影权重向量 ,

, :

:

(3)

(3)

然后,应用投影权重向量 ,

, 分别对

分别对 ,

, 进行投影,获取摄像机A原型集(gallery set)和摄像机B测试集(probe sets)的投影后的特征描述子,如公式(4)所示:

进行投影,获取摄像机A原型集(gallery set)和摄像机B测试集(probe sets)的投影后的特征描述子,如公式(4)所示:

(4)

(4)

最后,计算公式(4) 、

、 中各对特征描述子(

中各对特征描述子( ,

, )间的余弦距离

)间的余弦距离 ,作为摄像机A原型集(gallery set)中测试样本

,作为摄像机A原型集(gallery set)中测试样本 和摄像机B测试集(probe sets)中测试样本

和摄像机B测试集(probe sets)中测试样本 的特征距离度量,如公式(5)所示:

的特征距离度量,如公式(5)所示:

(5)

(5)
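The pipeline of this section (Gaussian kernel, KCCA training, projection of gallery and probe kernels, cosine distance) can be sketched in NumPy as follows. This is a sketch under stated assumptions rather than the authors' implementation: the ridge term `reg`, the omission of kernel centering, the use of a singular value decomposition to solve the regularized problem, and every function and variable name are choices made here for illustration. Cosine distance is taken as one minus cosine similarity.

```python
import numpy as np

def gaussian_kernel(X, Y, s):
    """Gaussian RBF kernel matrix between the rows of X and the rows of Y."""
    d2 = (X ** 2).sum(axis=1)[:, None] + (Y ** 2).sum(axis=1)[None, :] - 2.0 * X @ Y.T
    return np.exp(-np.maximum(d2, 0.0) / (2.0 * s ** 2))

def fit_kcca(K_a, K_b, n_components, reg=0.1):
    """Regularized KCCA on two n x n training kernel matrices.

    With R = K + reg * I, substituting u = R_a @ alpha and v = R_b @ beta turns
    the regularized correlation into u^T T v / (|u| |v|), which is maximized by
    the leading singular vectors of T = R_a^{-1} K_a K_b R_b^{-1}.
    """
    n = K_a.shape[0]
    R_a = K_a + reg * np.eye(n)
    R_b = K_b + reg * np.eye(n)
    T = np.linalg.solve(R_a, K_a) @ np.linalg.solve(R_b, K_b).T
    U, rho, Vt = np.linalg.svd(T)
    alpha = np.linalg.solve(R_a, U[:, :n_components])
    beta = np.linalg.solve(R_b, Vt[:n_components].T)
    return alpha, beta, rho[:n_components]

def cosine_distance_matrix(P, G):
    """Entry (i, j) is 1 - cosine similarity of probe row i and gallery row j."""
    Pn = P / np.linalg.norm(P, axis=1, keepdims=True)
    Gn = G / np.linalg.norm(G, axis=1, keepdims=True)
    return 1.0 - Pn @ Gn.T

# Toy two-view data standing in for LOMO-FFN descriptors from cameras A and B.
rng = np.random.default_rng(0)
latent = rng.normal(size=(60, 4))
X_a = latent @ rng.normal(size=(4, 12)) + 0.1 * rng.normal(size=(60, 12))
X_b = latent @ rng.normal(size=(4, 12)) + 0.1 * rng.normal(size=(60, 12))
train_a, gallery_a = X_a[:40], X_a[40:]
train_b, probe_b = X_b[:40], X_b[40:]

s = 5.0  # kernel scale, chosen arbitrarily for the toy data
alpha, beta, rho = fit_kcca(gaussian_kernel(train_a, train_a, s),
                            gaussian_kernel(train_b, train_b, s), n_components=5)
G = gaussian_kernel(gallery_a, train_a, s) @ alpha   # projected gallery set
P = gaussian_kernel(probe_b, train_b, s) @ beta      # projected probe set
D = cosine_distance_matrix(P, G)                     # probe-by-gallery distances
print(D.shape)
```

Some ridge term is generally needed in practice, because an unregularized kernel CCA on full-rank kernel matrices can reach perfect correlation on the training set without generalizing; the specific form used here is only one common choice.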

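4. Experimental Results

We evaluate the cumulative matching characteristic (CMC) of the proposed algorithm on two challenging public datasets, VIPeR and PRID450S, which are summarized in Table 1. Half of the identities are randomly selected as the training set and the other half as the test set. The training samples are used to learn the KCCA projection weight vectors, and the test samples are used to measure the feature distance between pedestrian images from different cameras.

In the experiments, we first compare the CMC performance of linear CCA and nonlinear KCCA on the same feature (LOMO-FFN). We then compare the CMC performance of the proposed algorithm with that of person re-identification algorithms published in recent years.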
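For readers unfamiliar with the CMC measure, the following sketch shows how rank-k matching scores can be computed from a probe-by-gallery distance matrix. It assumes a single-shot setting in which probe i and gallery i are the same identity; the function name and the toy distance matrix are illustrative and are not results from the paper.

```python
import numpy as np

def cmc_curve(dist, max_rank=20):
    """Cumulative matching scores (%) for ranks 1..max_rank.

    dist[i, j] is the distance from probe i to gallery j, and the true match
    of probe i is assumed to be gallery i.
    """
    n = dist.shape[0]
    true_d = np.diag(dist)[:, None]
    # 0-based rank of the true match: how many gallery items are strictly closer.
    ranks = (dist < true_d).sum(axis=1)
    hits = np.bincount(ranks, minlength=n)
    return 100.0 * np.cumsum(hits)[:max_rank] / n

# Toy 3 x 3 example: probes 0 and 2 are matched at rank 1, probe 1 at rank 3.
toy = np.array([[0.1, 0.5, 0.9],
                [0.2, 0.3, 0.1],
                [0.9, 0.8, 0.7]])
scores = cmc_curve(toy, max_rank=3)
print(scores)
```

The rank-1 entry of this curve is the "rank-1 recognition rate" quoted in the comparisons that follow.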

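4.1. VIPeR Dataset

Table 2 compares the CMC performance of CCA and KCCA on the VIPeR dataset using the same feature (LOMO-FFN). KCCA-based distance metric learning clearly outperforms CCA on VIPeR: the rank-1 recognition rate is 31.3% with KCCA but only 12.7% with CCA.

Table 3 lists the recognition rates on VIPeR of person re-identification algorithms published in recent years. The proposed algorithm achieves the best recognition rate among the five algorithms compared.

4.2. PRID450S Dataset

Table 4 compares the CMC performance of linear CCA and nonlinear KCCA on the PRID450S dataset using the same feature (LOMO-FFN). Nonlinear KCCA clearly outperforms linear CCA on PRID450S: the rank-1 recognition rate is 48.0% with KCCA but only 20.2% with CCA.

Table 5 lists the recognition rates on PRID450S of person re-identification algorithms published in recent years. The proposed algorithm achieves the best recognition rate among the five algorithms compared.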

Table 1. Brief introduction of the VIPeR and PRID450S datasets

Table 2. Recognition rates of CCA/KCCA on VIPeR. Only the cumulative matching scores (%) at ranks 1, 5, 10, and 20 are listed

Table 3. Recognition rates of person re-identification algorithms published in recent years on VIPeR. Only the cumulative matching scores (%) at ranks 1, 5, 10, and 20 are listed

Table 4. Recognition rates of CCA/KCCA on PRID450S. Only the cumulative matching scores (%) at ranks 1, 5, 10, and 20 are listed

Table 5. Recognition rates of person re-identification algorithms published in recent years on PRID450S. Only the cumulative matching scores (%) at ranks 1, 5, 10, and 20 are listed

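5. Conclusion

This paper proposes a person re-identification algorithm based on invariant features and kernel metric learning. The invariant feature LOMO-FFN is used to train the Gaussian kernel distance metric learning model KCCA for person re-identification. Experimental results on two challenging person re-identification datasets, VIPeR and PRID450S, demonstrate the superiority of the proposed algorithm.

Funding

National Natural Science Foundation of China (61704161), Natural Science Research Project of the Anhui Provincial Department of Education (KJHS2016B03), Huangshan University Horizontal Research Project (hxkt20170059), and Huangshan University School-Local Cooperation Project (2017XDHZ021).

Cite This Article

Liu, Q., Hou, L. and Peng, Z.Y. (2018) Invariant Feature and Kernel Distance Metric Learning Based Person Re-Identification. Journal of Image and Signal Processing, 07(02), 65-73. https://doi.org/10.12677/JISP.2018.72008

References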

- 1. Xiong, F., Gou, M., Camps, O. and Sznaier, M. (2014) Person Re-Identification using Kernel-Based Metric Learning Methods. European Conference on Computer Vision, Zurich, 6-12 September 2014.

- 2. Jobson, D.J., Rahman, Z.U. and Woodell, G.A. (1997) A Multiscale Retinex for Bridging the Gap between Color Images and the Human Observation of Scenes. IEEE Transactions on Image Processing, 6, 965-976. https://doi.org/10.1109/83.597272

- 3. Liao, S., et al. (2010) Modeling Pixel Process with Scale Invariant Local Patterns for Background Subtraction in Complex Scenes. IEEE Conference Computer Vision and Pattern Recognition, Istanbul, 23-26 August 2010. https://doi.org/10.1109/CVPR.2010.5539817

- 4. Zeng, M., et al. (2015) Efficient Person Re-Identification by Hybrid Spatiogram and Covariance Descriptor. IEEE Conference on Computer Vision and Pattern Recognition, Boston, 7-12 June 2015.

- 5. Ma, B., Su, Y. and Jurie, F. (2014) Covariance Descriptor Based on Bio-Inspired Features for Person Re-Identification and Face Verification. Image and Vision Computing, 32, 379-390. https://doi.org/10.1016/j.imavis.2014.04.002

- 6. Wang, W., et al. (2016) Learning Patch-Dependent Kernel Forest for Person Re-Identification. IEEE Winter Conference on Applications of Computer Vision, Lake Placid, 7-9 March 2016. https://doi.org/10.1109/WACV.2016.7477578

- 7. Cheng, E.D. and Piccardi, M. (2006) Matching of Objects Moving across Disjoint Cameras. IEEE International Conference on Image Processing (ICIP), Atlanta, 8-11 October 2006. https://doi.org/10.1109/ICIP.2006.312725

- 8. Van De Weijer, J. and Schmid, C. (2006) Coloring Local Feature Extraction. European Conference on Computer Vision (ECCV), Graz, 7-13 May 2006. https://doi.org/10.1007/11744047_26

- 9. Zhao, R., Ouyang, W. and Wang, X. (2014) Learning Mid-Level Filters for Person Re-Identification. IEEE Conference on Computer Vision and Pattern Recognition, Columbus, 23-28 June 2014. https://doi.org/10.1109/CVPR.2014.26

- 10. Li, W. and Wang, X. (2013) Locally Aligned Feature Transforms across Views. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Oregon, 23-28 June 2013. https://doi.org/10.1109/CVPR.2013.461

- 11. Geng, Y., et al. (2015) A Person Re-Identification Algorithm by Exploiting Region-Based Feature Salience. Journal of Visual Communication and Image Representation, 29, 89-102. https://doi.org/10.1016/j.jvcir.2015.02.001

- 12. Yang, Y., et al. (2016) Metric Embedded Discriminative Vocabulary Learning for High-Level Person Representation. Association for the Advancement of Artificial Intelligence, Phoenix, 12-17 February 2016.

- 13. Shi, Z., et al. (2015) Transferring a Semantic Representation for Person Re-Identification and Search. IEEE Conference on Computer Vision and Pattern Recognition, Boston, 7-12 June 2015. https://doi.org/10.1109/CVPR.2015.7299046

- 14. Shen, Y., et al. (2015) Person Re-Identification with Correspondence Structure Learning. IEEE International Conference on Computer Vision, Santiago, 11-18 December 2015. https://doi.org/10.1109/ICCV.2015.366

- 15. Zhao, R., Ouyang, W. and Wang, X. (2013) Unsupervised Salience Learning for Person Re-Identification. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Oregon, 23-28 June 2013. https://doi.org/10.1109/CVPR.2013.460

- 16. Mignon, A. and Jurie, F. (2012) PCCA: A New Approach for Distance Learning from Sparse Pairwise Constraints. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, 16-21 June 2012. https://doi.org/10.1109/CVPR.2012.6247987

- 17. Ma, B., Su, Y. and Jurie, F. (2012) BiCov: A Novel Image Representation for Person Re-Identification and Face Verification. British Machine Vision Conference (BMVC), Guildford, 3-7 September 2012, 57.1-57.11. https://doi.org/10.5244/C.26.57

- 18. Belongie, S., Malik, J. and Puzicha, J. (2002) Shape Matching and Object Recognition Using Shape Contexts. IEEE Transactions on Pattern Analysis and Machine Intelligence, 24, 509-512. https://doi.org/10.1109/34.993558

- 19. Wang, X., et al. (2007) Shape and Appearance Context Modeling. IEEE International Conference on Computer Vision (ICCV), Rio de Janeiro, 14-20 October 2007. https://doi.org/10.1109/ICCV.2007.4409019

- 20. Cheng, D.S., et al. (2011) Custom Pictorial Structures for Re-Identification. British Machine Vision Conference (BMVC), Dundee, 29 August-2 September 2011. https://doi.org/10.5244/C.25.68

- 21. Bazzani, L., et al. (2013) Symmetry-Driven Accumulation of Local Features for Human Characterization and Re-Identification. Computer Vision and Image Understanding, 117, 130-144. https://doi.org/10.1016/j.cviu.2012.10.008

- 22. Guo, Y., et al. (2008) Matching Vehicles under Large Pose Transformations Using Approximate 3D Models and Piecewise MRF Model. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Anchorage, Alaska, 24-26 June 2008.

- 23. Layne, R., Hospedales, T. and Gong, S. (2012) Person Re-Identification by Attributes. British Machine Vision Conference (BMVC), Guildford, 3-7 September 2012, 24.1-24.11. https://doi.org/10.5244/C.26.24

- 24. Gray, D. and Tao, H. (2008) Viewpoint Invariant Pedestrian Recognition with an Ensemble of Localized Features. European Conference on Computer Vision (ECCV), Marseille, 12-18 October 2008, 262-275.

- 25. Yang, Y., et al. (2014) Salient Color Names for Person Re-Identification. European Conference on Computer Vision (ECCV), Zurich, 6-12 September 2014, 536-551.

- 26. Liao, S., Hu, Y., Zhu, X. and Li, S.Z. (2015) Person Re-Identification by Local Maximal Occurrence Representation and Metric Learning. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, 7-12 June 2015. https://doi.org/10.1109/CVPR.2015.7298832

- 27. Wu, S., et al. (2016) An Enhanced Deep Feature Representation for Person Re-Identification. IEEE Winter Conference on Applications of Computer Vision, Lake Placid, 7-9 March 2016. https://doi.org/10.1109/WACV.2016.7477681

- 28. Wei, Y.C., Chen, F., Zhuang, X. and Fu, Q. (2017) 3D Object Recognition Based on Invariant Angle Contours. Journal of Sichuan University, 54, 759-763. (In Chinese)

- 29. Davis, J.V., et al. (2007) Information-Theoretic Metric Learning. International Conference on Machine Learning, Corvallis, 20-24 June 2007. https://doi.org/10.1145/1273496.1273523

- 30. Zheng, W.S., Gong, S. and Xiang, T. (2011) Person Re-Identification by Probabilistic Relative Distance Comparison. IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, 20-25 June 2011.

- 31. Li, Z., et al. (2013) Learning Locally-Adaptive Decision Functions for Person Verification. IEEE Conference on Computer Vision and Pattern Recognition, Portland, 23-28 June 2013. https://doi.org/10.1109/CVPR.2013.463

- 32. Koestinger, M., et al. (2012) Large Scale Metric Learning from Equivalence Constraints. IEEE Conference on Computer Vision and Pattern Recognition, Providence, 16-21 June 2012. https://doi.org/10.1109/CVPR.2012.6247939

- 33. Pedagadi, S., et al. (2013) Local Fisher Discriminant Analysis for Pedestrian Re-Identification. IEEE Conference on Computer Vision and Pattern Recognition, Portland, 23-28 June 2013. https://doi.org/10.1109/CVPR.2013.426

- 34. Liu, X., et al. (2015) An Ensemble Color Model for Human Reidentification. IEEE Winter Conference on Applications of Computer Vision, Waikoloa, 6-9 January 2015.